PUBLICATIONS

* Auto-refreshing list (may not be up to date)

Neither Here Nor There: Botanical (mis)Communication

Neither Here Nor There is a speculative installation exploring human perception of the natural world from the perspective of four plants. Probing the act of communication as well as the indeterminate gap between what is deemed human and non-human, the installation highlights the complex role that technology plays in our current understanding of the human. Standing in large pots around a meeting table, the plants engage in a free-roaming discussion about what they think it means to be human and about planetary politics. Key questions and terms are fed into GPT-3 (an AI-based natural language text generator), and the output is played as plant sounds. As the plants converse in this manner, no aspect of their conversation is intuitively legible to the viewer. Viewers are invited to use a decoding app on their cell phones that translates these plant sounds. By avoiding an obvious mode of human-centric representation, the installation places the viewer in unknown territory, asking them to consider what intelligence, communication, and cognition mean beyond the human perspective.

Harpreet Sareen, Lauria Clarke, and Yasuaki Kakehi. 2023. Neither Here Nor There: Botanical (mis)Communication. In Proceedings of the 15th Conference on Creativity and Cognition (C&C '23). Association for Computing Machinery, New York, NY, USA, 288–292. https://doi.org/10.1145/3591196.3596828

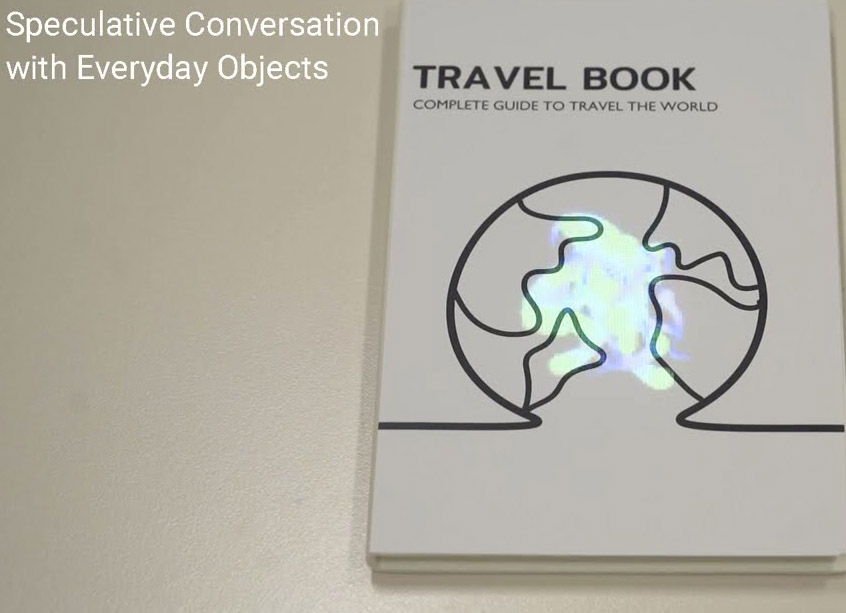

The Talk: Speculative Conversation with Everyday Objects

Communication between humans and everyday objects is often implicit. In this paper, we create speculative scenarios in which users can have a conversation, as explicit communication, with everyday objects that usually do not have conversational artificial intelligence (AI) installed, such as a book. We present a design fiction narrative and a conversational system for conversing with everyday objects. Our user test showed positive acceptance of conversations with everyday objects, and users tried to build human-like relationships with them.

Keijiroh Nagano, Maya Georgieva, Harpreet Sareen, and Clarinda Mac Low. 2023. The Talk: Speculative Conversation with Everyday Objects. In ACM SIGGRAPH 2023 Posters (SIGGRAPH '23). Association for Computing Machinery, New York, NY, USA, Article 12, 1–2. https://doi.org/10.1145/3588028.3603643

Heliobots: Stem-Based Robots on Helianthus annuus for Directed Morphological Growth

This paper introduces a study demonstrating robotically directed plant growth, in which robots live on the stem of a plant and shape its growth without external artificial scaffolding. The software encoded in the robot maintains locomotion at intervals over twelve weeks, triggering onboard lighting as the robot climbs in order to direct the growth. Outputs of twelve weeks of growth experiments are presented along with the final shapes of the plants. We also discuss the morphogenetic plasticity of plants, their growth responses to the environment, and other shaping mechanisms that could be used in the future. Such work may pave the way toward new forms of arbortecture.

Sareen, Harpreet, Yasuaki Kakehi, Pattie Maes, and Joseph Jacobson. "Heliobots: Stem-Based Robots on Helianthus annuus for Directed Morphological Growth." In Human Centric: Proceedings of the 28th International Conference of the Association for Computer-Aided Architectural Design Research in Asia (CAADRIA) 2023, March 18-24, 2023, pp. 291-300. https://doi.org/10.52842/conf.caadria.2023.2.291

ephemera: Bubble Representations as Metaphors for Endangered Species

The effects of humans' hierarchical relationship with non-humans are now more pronounced than ever. Anthropogenic ecological stressors, including high levels of carbon dioxide, water scarcity, and habitat fragmentation, have led to the disruption of climate systems, in turn endangering many local and global species. ephemera is an installation composed of glass vessels that show bubble images representing animals from all continents and ecologies currently under threat according to the IUCN Red List. These self-assembling bubble pictures, formed by the nucleation of CO2 bubbles in water, are in homeostasis at the beginning of the installation, then shrink each hour until they disappear within a few days. The tension between present endangerment and the urgency of future action manifests in the shrinking of these bubbles, invoking an unnatural ephemerality caused by human impact. The fauna pictures in this installation, composed of carbon dioxide bubbles, symbolize the transitoriness of now-threatened species.

Harpreet Sareen, Yibo Fu, Yasuaki Kakehi. “ephemera: Bubble Representations as Metaphors for Endangered Species”. Proceedings of the ISEA 2023: 28th International Symposium on Electronic Art, Paris, May 15-22 2023.

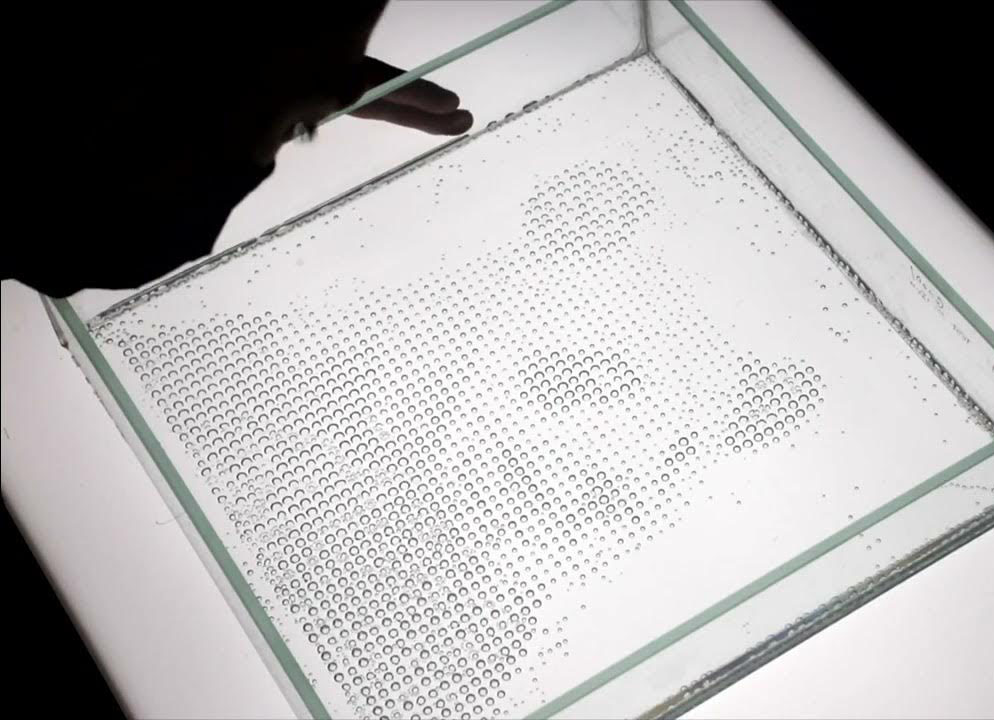

BubbleTex: Designing Heterogenous Wettable Areas for Carbonation Bubble Patterns on Surfaces

We present a new fabrication technique that enables control of the position and size of CO2 bubbles within carbonated liquids. Instead of soap bubbles, boiling water, or droplets, we show the creation of patterns, images, and text through sessile bubbles that exhibit a lifetime of several days. Surfaces with mixed-wettability regions are created on glass and plastic using ceramic coatings or plasma projection, producing patterns that are largely invisible to the human eye. Different regions react to liquids differently. Nucleation begins once carbonated liquid is poured onto the surface: bubbles nucleate in the hydrophobic regions, adhere strongly to the surface, and can be controlled in size from 0.5 mm to 6.5 mm. Bubbles go from initially popping or becoming buoyant during CO2 supersaturation to stabilizing at their positions within minutes. Our design software allows users to import images and convert them into parametric pixelation forms conducive to fabrication, which result in the nucleation of bubbles at the required positions. Through this work, we enable the use of carbonation bubbles as a new design material for designers and researchers.
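The image-to-pixelation step described above can be illustrated with a minimal sketch. It assumes a grayscale input and a simple rule in which darker image blocks map to larger hydrophobic spots (and thus larger bubbles) within the 0.5 mm to 6.5 mm range reported in the abstract; the function name, the linear mapping, and the `threshold` parameter are hypothetical and are not taken from the BubbleTex design software.

```python
from typing import List

# Size range reported in the abstract; everything else here is hypothetical.
MIN_DIAMETER_MM = 0.5
MAX_DIAMETER_MM = 6.5


def pixelate_to_bubbles(gray: List[List[int]], cell: int, threshold: float = 0.1) -> List[List[float]]:
    """Map an 8-bit grayscale image to a grid of target bubble diameters (mm).

    Each cell x cell block is averaged; darker blocks get larger hydrophobic
    spots. Blocks whose darkness is below `threshold` get 0.0 (no spot).
    Partial blocks at the right and bottom edges are dropped.
    """
    rows, cols = len(gray), len(gray[0])
    grid: List[List[float]] = []
    for r0 in range(0, rows - rows % cell, cell):
        row: List[float] = []
        for c0 in range(0, cols - cols % cell, cell):
            block = [gray[r][c] for r in range(r0, r0 + cell) for c in range(c0, c0 + cell)]
            darkness = 1.0 - (sum(block) / len(block)) / 255.0  # 0 = white, 1 = black
            if darkness < threshold:
                row.append(0.0)  # leave this region hydrophilic: no bubble
            else:
                size = MIN_DIAMETER_MM + darkness * (MAX_DIAMETER_MM - MIN_DIAMETER_MM)
                row.append(round(size, 2))
        grid.append(row)
    return grid


if __name__ == "__main__":
    image = [
        [0, 0, 255, 255],
        [0, 0, 255, 255],
        [128, 128, 255, 255],
        [128, 128, 255, 255],
    ]
    print(pixelate_to_bubbles(image, cell=2))  # [[6.5, 0.0], [3.49, 0.0]]
```

A real fabrication pipeline would additionally convert each diameter into a physical spot geometry for the coating or plasma step; that conversion depends on the surface chemistry and is deliberately left out here.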

Harpreet Sareen, Yibo Fu, Nour Boulahcen, and Yasuaki Kakehi. 2023. BubbleTex: Designing Heterogenous Wettable Areas for Carbonation Bubble Patterns on Surfaces. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI '23). Association for Computing Machinery, New York, NY, USA, Article 421, 1–15.

Plantae Agrestis: Distributed, Self-organizing Cybernetic Plants In A Botanical Conservatory

Conventional digital logic embodied in silicon-based materials has long neglected the capabilities of natural systems. This work seeks to embody a new hybrid form portraying the analogy between chemical and electrical signaling. Through an installation at a botanical garden, technological functions in this setting are relegated to plant controls. A number of plants are interfaced individually, through their own internal signals, with robotic extensions and are left to self-organize in a botanical conservatory. We observe the emergent behavior to consider its implications for bioelectronic models, materials, and processes.

Harpreet Sareen, Yasuaki Kakehi; Plantae Agrestis: Distributed, Self-Organizing Cybernetic Plants in a Botanical Conservatory. Leonardo 2023; 56 (1): 41–42.

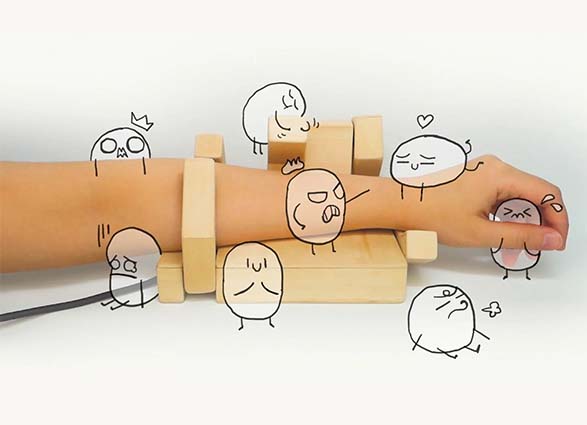

TactorBots: A Haptic Design Toolkit for Out-of-lab Exploration of Emotional Robotic Touch

Emerging research has demonstrated the viability of emotional communication through haptic technology inspired by interpersonal touch. However, the meaning-making of artificial touch remains ambiguous and contextual. We see this ambiguity caused by robotic touch’s "otherness" as an opportunity for exploring alternatives. To empower emotional haptic design in longitudinal out-of-lab exploration, we devise TactorBots, a design toolkit consisting of eight wearable hardware modules for rendering robotic touch gestures controlled by a web-based software application. We deployed TactorBots to thirteen designers and researchers to validate its functionality, characterize its design experience, and analyze what, how, and why alternative perceptions, practices, contexts, and metaphors would emerge in the experiment. We provide suggestions for designing future toolkits and field studies based on our experiences. Reflecting on the findings, we derive design implications for further enhancing the ambiguity and shifting the mindsets to expand the design space.

Ran Zhou, Zachary Schwemler, Akshay Baweja, Harpreet Sareen, Casey Lee Hunt, and Daniel Leithinger. 2023. TactorBots: A Haptic Design Toolkit for Out-of-lab Exploration of Emotional Robotic Touch. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI '23). Association for Computing Machinery, New York, NY, USA, Article 370, 1–19. https://doi.org/10.1145/3544548.3580799

Demonstrating TactorBots: A Haptic Design Toolkit for Exploration of Emotional Robotic Touch

Emerging research has demonstrated the viability of emotional communication through haptic technology inspired by interpersonal touch. However, the meaning-making of artificial touch remains ambiguous and contextual. We see this ambiguity caused by robotic touch’s "otherness" as an opportunity for exploring alternatives. To empower designers to explore emotional robotic touch, we devise TactorBots. It contains eight plug-and-play wearable tactor modules that render a series of social gestures driven by servo motors. Our specialized web GUI allows easy control, modification, and storage of tactile patterns to support fast prototyping. Taking emotional haptics as a "design canvas" with broad opportunities, TactorBots is the first "playground" for designers to try out various tactile sensations, feel out the nuanced connection between touches and emotions, and come up with creative ideas.

Ran Zhou, Zachary Schwemler, Akshay Baweja, Harpreet Sareen, Casey Lee Hunt, and Daniel Leithinger. 2023. Demonstrating TactorBots: A Haptic Design Toolkit for Exploration of Emotional Robotic Touch. In Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA, Article 438, 1–5.

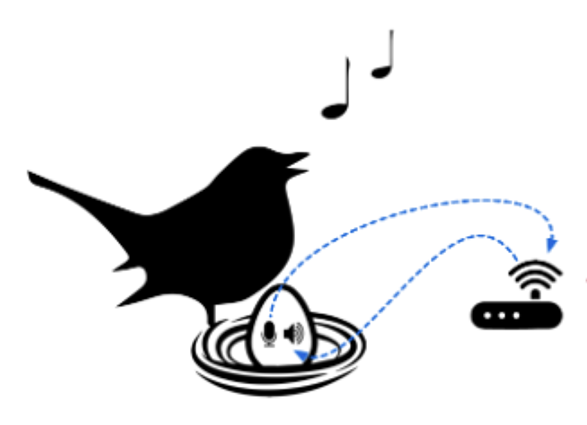

TamagoPhone: A Framework for Augmenting Artificial Incubators to Enable Vocal Interaction Between Bird Parents and Eggs

For some precocial bird species, vocal interactions occur pre-hatching between parent and embryo within the egg. Such prenatal sensory experiences may affect development and have long-term consequences for postnatal behavior. Although artificial incubators increase survival rates, they prevent vocal communication, potentially hindering the development of species identity and behaviors. This work introduces a research design predicated on a review of relevant literature, aimed at new applications for animal management. In the nest, the real egg is replaced by an augmented “dummy” egg embedded with a microphone and speaker. We present our motivations, followed by an overview of the system and a series of considerations in terms of engineering, design, species-specific, and evaluation requirements. Finally, we elaborate on different application contexts such as research, farming, and preservation.

Rebecca Kleinberger, Megha Vemuri, Janelle Sands, Harpreet Sareen, Janet M. Baker. 2022 (Upcoming). TamagoPhone: A Framework for Augmenting Artificial Incubators to Enable Vocal Interaction Between Bird Parents and Eggs. In Ninth International Conference on Animal-Computer Interaction 5-8 December 2022, hosted by Northumbria University, Newcastle-upon-Tyne, UK.

Algaphon: Transducing Human Input to Photosynthetic Radiation Parameters in Algae Timescale

One of the biggest challenges of our time is for humans to truly understand how a human action propagates through another complex natural system. What is the difference between human and ecological time? Algaphon is an online and offline installation in which algae bubbles that ring at the Minnaert frequency near algal filaments are rendered audible through a hydrophone. The installation comprises aquariums in Tokyo, New York, and Linz, each with a different species of algae. The aquarium lighting is connected to participatory action: online visitors leave a voice message that is translated into photosynthetically active radiation (PAR) variations in a remote aquarium. The algae bubbles' response to this human speech is then recorded and emailed back to the visitor, inviting a reflective dialog with the algal species.
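The "Minnaert frequency" mentioned above is the natural resonance of a gas bubble in a liquid, given by the standard Minnaert relation f0 = (1 / (2πR)) · sqrt(3γp / ρ). A minimal sketch, assuming an ideal diatomic gas (γ ≈ 1.4, reasonable for oxygen), atmospheric pressure, fresh water, and no surface-tension or damping corrections, suggests why mm-scale bubbles ring in the audible kHz range (roughly 3.3 kHz for a 1 mm radius). The parameter defaults are illustrative assumptions, not values from the Algaphon installation.

```python
import math


def minnaert_frequency(radius_m: float,
                       pressure_pa: float = 101_325.0,
                       gamma: float = 1.4,
                       density_kg_m3: float = 998.0) -> float:
    """Minnaert resonance (Hz) of a spherical gas bubble in a liquid.

    f0 = (1 / (2 * pi * R)) * sqrt(3 * gamma * p / rho)
    Assumes adiabatic oscillation of an ideal gas and neglects surface
    tension, viscosity, and thermal damping.
    """
    if radius_m <= 0:
        raise ValueError("radius must be positive")
    return math.sqrt(3.0 * gamma * pressure_pa / density_kg_m3) / (2.0 * math.pi * radius_m)


if __name__ == "__main__":
    # Illustrative radii only; actual algal bubble sizes were not reported.
    for r_mm in (0.5, 1.0, 2.0):
        print(f"R = {r_mm} mm -> f0 ~ {minnaert_frequency(r_mm / 1000):.0f} Hz")
```

Because f0 scales as 1/R, halving the bubble radius doubles the pitch, so a hydrophone recording effectively encodes the bubble size distribution as a spectrum.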

Harpreet Sareen, Franziska Mack, Yasuaki Kakehi. 2022. Algaphon: Transducing Human Input to Photosynthetic Radiation Parameters in Algae Timescale. In International Symposium on Electronic Art (ISEA '22). Barcelona, Spain.

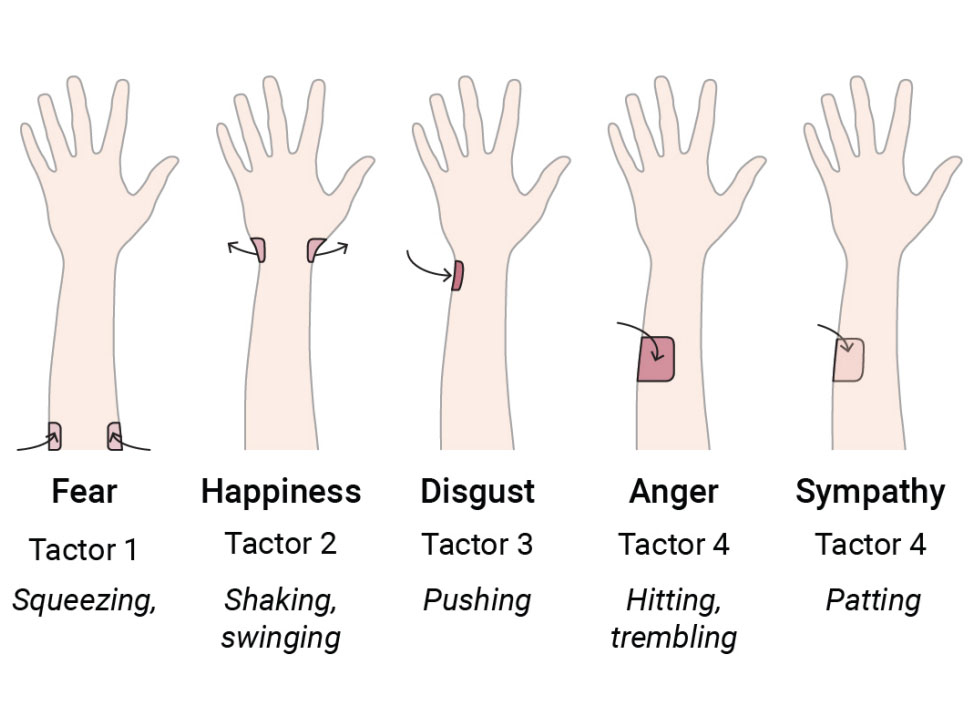

EmotiTactor: Exploring How Designers Approach Emotional Robotic Touch

In this work, we bring designers into the exploration of emotional robotic touch, discuss their design decisions, and reflect on their insights. Prior psychology findings show humans can communicate distinct emotions solely through touch. We hypothesize that similar effects might also be applicable to robotic touch. To enable designers to easily generate and modify various types of affective touch for conveying emotions (e.g., anger, happiness), we developed a platform consisting of a robotic tactor interface and a software design tool. When conducting an elicitation study with eleven interaction designers, we discovered common patterns in their generated tactile sensations for each emotion. We also illustrate the strategies, metaphors, and reactions that the designers deployed in the design process. Our findings uncover that the “otherness” of robotic touch broadens the design possibilities of emotional communication beyond mimicking interpersonal touch.

Ran Zhou, Harpreet Sareen, Yufei Zhang, and Daniel Leithinger. 2022. EmotiTactor: Exploring How Designers Approach Emotional Robotic Touch. In Designing Interactive Systems Conference (DIS '22). Association for Computing Machinery, New York, NY, USA, 1330–1344. https://doi.org/10.1145/3532106.3533487

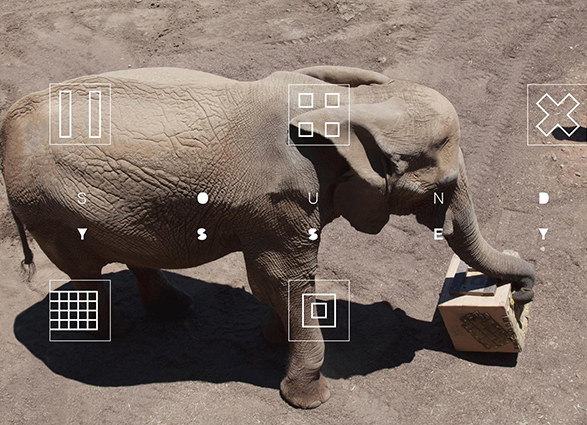

Soundyssey: Hybrid Enrichment System for Elephants in Managed Care

Animals in managed care ideally are provided with environmental stimuli for their psychological and physiological well-being. Most commonly, food-based enrichment methods are used to mimic wild conditions, allowing animals to search and forage. However, many of these devices fall short in providing choice and control to animals, an important factor in cognitive engagement. We present an active auditory enrichment device for elephants that encourages play behaviors through embodied interaction. Our preliminary results indicate that elephants understood the system and interacted with the interface significantly longer than with passive objects. During the interactions, elephants showed more positive behaviors such as focused exploration, reducing the possibility of negative stereotypical behaviors. We suggest that such technologically-enhanced objects and embodied design can enhance standards of managed animal care.

Yating Wu, Harpreet Sareen, and Gabriel Miller. 2021. “Soundyssey: Hybrid Enrichment System for Elephants in Managed Care.” In Proceedings of the Fifteenth International Conference on Tangible, Embedded, and Embodied Interaction (TEI '21), Online, February 14-19, 2021. Association for Computing Machinery.

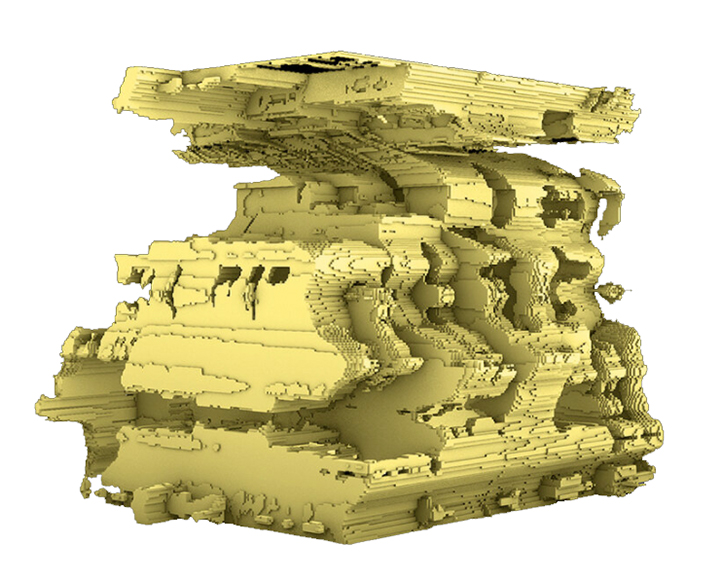

Machinic Interpolations: A GAN Pipeline for Integrating Lateral Thinking in Computational Tools of Architecture

In this paper, we discuss a new tool pipeline that aims to re-integrate lateral thinking strategies in computational tools of architecture. We present a 4-step AI-driven pipeline, based on Generative Adversarial Networks (GANs), that draws from the ability to access the latent space of a machine and use this space as a digital design environment. We demonstrate examples of navigating in this space using vector arithmetic and interpolations as a method to generate a series of images that are then translated to 3D voxel structures. Through a gallery of forms, we show how this series of techniques could result in unexpected spaces and outputs beyond what could be produced by human capability alone.
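The latent-space navigation described above (vector arithmetic and interpolation) can be sketched in a few lines. This is a minimal illustration assuming latent codes are plain float vectors; the generator that turns each code into an image and the voxelization step are not shown, and the function names are hypothetical. Linear interpolation is used for simplicity; many GAN workflows prefer spherical interpolation for Gaussian latents, and the paper's exact choice is not stated here.

```python
from typing import List, Sequence

Vector = List[float]


def lerp(a: Sequence[float], b: Sequence[float], t: float) -> Vector:
    """Linear interpolation between two latent codes: (1 - t) * a + t * b."""
    if len(a) != len(b):
        raise ValueError("latent codes must have the same dimension")
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolation_series(a: Sequence[float], b: Sequence[float], steps: int) -> List[Vector]:
    """Return `steps` evenly spaced latent codes from a to b, endpoints included."""
    if steps < 2:
        raise ValueError("need at least two steps")
    return [lerp(a, b, i / (steps - 1)) for i in range(steps)]


def latent_analogy(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> Vector:
    """Vector arithmetic a - b + c, the classic latent-space analogy."""
    return [x - y + z for x, y, z in zip(a, b, c)]


if __name__ == "__main__":
    # Toy 2-D codes; real GAN latents typically have hundreds of dimensions.
    for z in interpolation_series([0.0, 0.0], [1.0, 2.0], steps=3):
        print(z)  # each z would be passed to a (hypothetical) generator G(z)
```

Each code in the series yields one image, so a smooth path through latent space produces the gradual sequence of forms that the pipeline then translates into 3D voxel structures.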

Asmar, Karen El; Sareen, Harpreet; "Machinic Interpolations: A GAN Pipeline for Integrating Lateral Thinking in Computational Tools of Architecture", pp. 60-66. In: Congreso SIGraDi 2020. São Paulo: Blucher, 2020. DOI 10.5151/sigradi2020-9

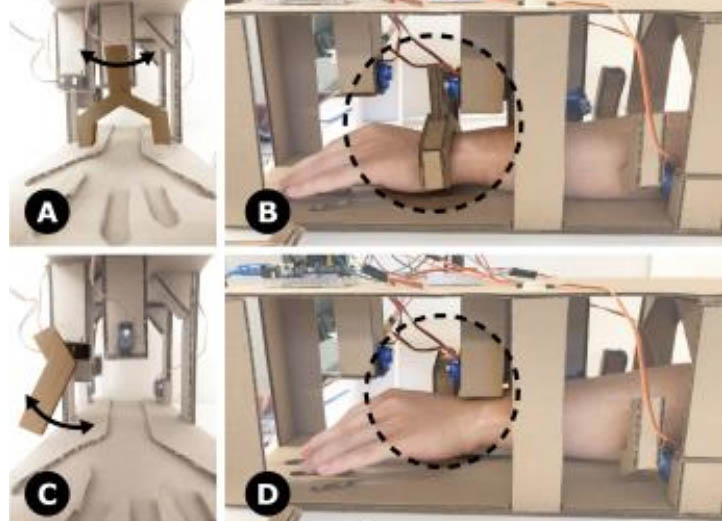

HexTouch: Affective Robot Touch for Complementary Interactions to Companion Agents in Virtual Reality

There is a growing need for social interaction in Virtual Reality (VR). Current social VR applications enable human-agent or interpersonal communication, usually by means of visual and audio cues. Touch, which is also an essential method for affective communication, has not received as much attention. To address this, we introduce HexTouch, a forearm-mounted robot that performs touch behaviors in sync with the behaviors of a companion agent, to complement visual and auditory feedback in virtual reality. The robot consists of four robotic tactors driven by servo motors, which render specific tactile patterns to communicate primary emotions (fear, happiness, disgust, anger, and sympathy). We demonstrate HexTouch through a VR game with physical-virtual agent interactions that facilitate the player-companion relationship and increase the immersion of the VR experience.

Zhou, Ran, Yanzhe Wu, and Harpreet Sareen. "HexTouch: Affective Robot Touch for Complementary Interactions to Companion Agents in Virtual Reality." 26th ACM Symposium on Virtual Reality Software and Technology. 2020.

HexTouch: A Wearable Haptic Robot for Complementary Interactions to Companion Agents in Virtual Reality

We propose a forearm-mounted robot that performs complementary touches in relation to the behaviors of a companion agent in virtual reality (VR). The robot consists of a series of tactors driven by servo motors that render specific tactile patterns to communicate primary emotions (fear, happiness, disgust, anger, and sympathy) and other notification cues. We showcase this through a VR game with physical-virtual agent interactions that facilitate the player-companion relationship and increase user immersion in specific scenarios. The player collaborates with the agent to complete a mission while receiving affective haptic cues with the potential to enhance sociality in the virtual world.

Ran Zhou, Yanzhe Wu, and Harpreet Sareen. 2020. HexTouch: A Wearable Haptic Robot for Complementary Interactions to Companion Agents in Virtual Reality. In SIGGRAPH Asia 2020 Emerging Technologies (SA '20). Association for Computing Machinery, New York, NY, USA, Article 8, 1–2.

EmotiTactor: Emotional Expression of Robotic Physical Contact

The study of affective communication through robots has primarily focused on facial expression and vocal interaction. However, communication between robots and humans can be significantly enriched through haptics. To improve the relationships between robotic artifacts and humans, we posed a design question: what if robots had the ability to express their emotions to humans via physical touch? We created a robotic tactor (tactile organ) interface that performs haptic stimulations on the forearm. We modified the timing, movement, and touch of the tactors on the forearm to create a palette of primary emotions. Through a preliminary case study, our results indicate varying success in individuals' ability to decode the primary emotions through robotic touch alone.

Zhou, Ran, and Harpreet Sareen. "EmotiTactor: Emotional Expression of Robotic Physical Contact." Companion Publication of the 2020 ACM on Designing Interactive Systems Conference. 2020.

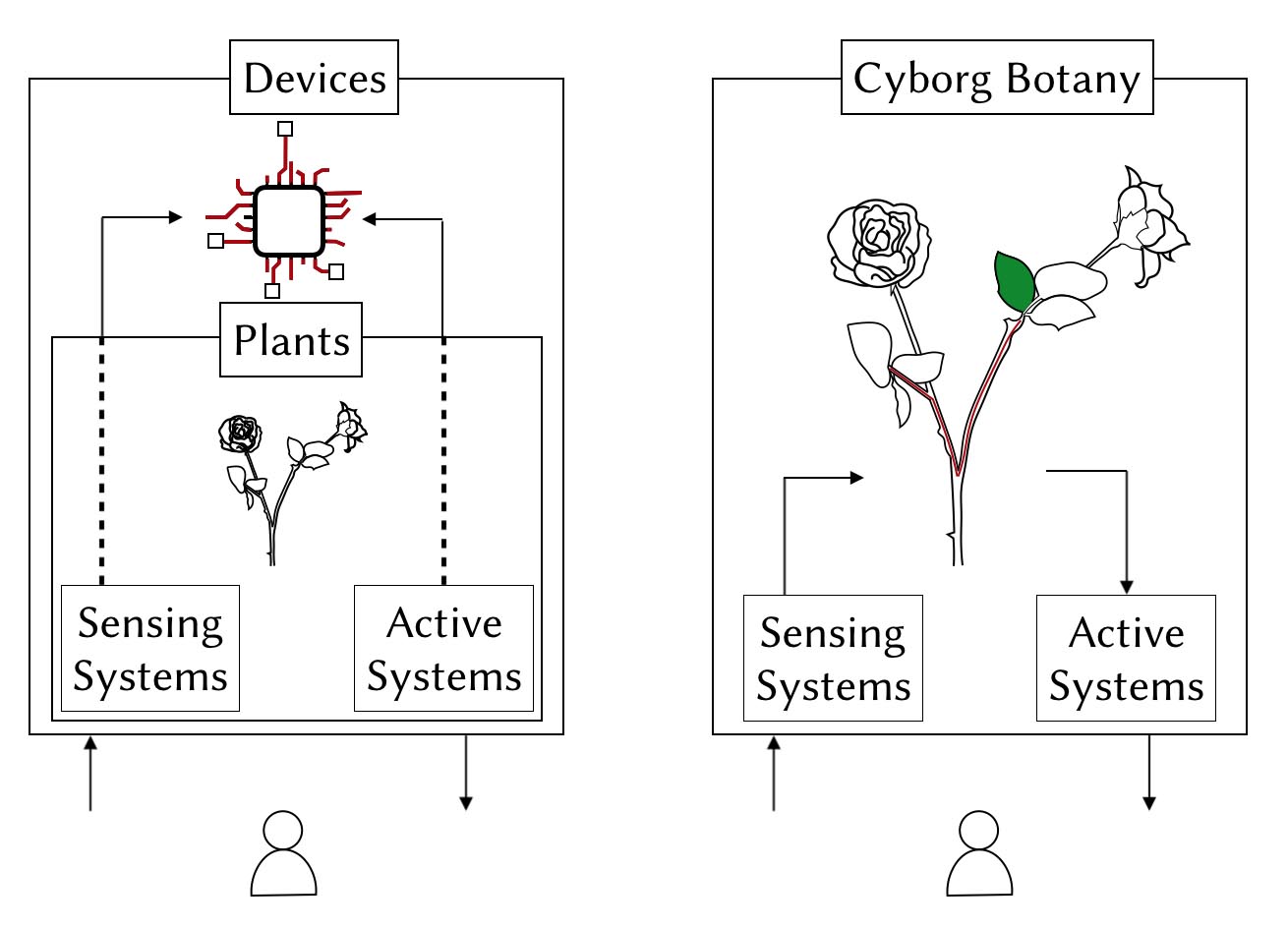

Cyborg Botany: Exploring In-Planta Cybernetic Systems for Interaction

Our traditional interaction possibilities have centered around our electronic devices. In recent years, progress in electronics and materials science has enabled us to go beyond the chip layer and work at the substrate level. This has helped us rethink form, sources of power, hosts, and, in turn, new interaction possibilities. However, the design of such devices has mostly been ground-up and fully synthetic. We discuss the analogy between artificial functions and natural capabilities in plants. Through two case studies, we demonstrate bridging unique natural operations of plants with the digital world. Our goal is to make use of the sensing and expressive abilities of nature for our interaction devices. Merging synthetic circuitry with plants' own physiology could pave the way to making these lifeforms responsive to our interactions and to their ubiquitous, sustainable deployment.

Sareen H and Maes P. "Cyborg Botany: Exploring In-Planta Cybernetic Systems for Interaction." Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. ACM, 2019. Glasgow, Scotland. May 4-9 2019.

Cyborg Botany: Augmented Plants as Sensors, Displays and Actuators

Nature has myriad plant organisms, many of them carrying unique sensing and expression abilities. Plants can sense the environment and other living entities, and can regenerate, actuate, or grow in response. Our interaction mechanisms and communication channels with such organisms are subtle, unlike our interaction with digital devices. We propose a new convergent view of interaction design in nature by merging and powering our electronic functionalities with existing biological functions of plants.

Sareen, Harpreet, Jiefu Zheng, and Pattie Maes. "Cyborg botany: augmented plants as sensors, displays and actuators." Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems. 2019.

FaceDisplay: Towards Asymmetric Multi-User Interaction for Nomadic Virtual Reality

Mobile VR HMDs enable scenarios where they are used in public, excluding all the people in the surroundings (Non-HMD Users) and reducing them to mere bystanders. We present FaceDisplay, a modified VR HMD consisting of three touch-sensitive displays and a depth camera attached to its back. People in the surroundings can perceive the virtual world through the displays and interact with the HMD user via touch or gestures. To further explore the design space of FaceDisplay, we implemented three applications (FruitSlicer, SpaceFace, and Conductor), each presenting different aspects of asymmetric co-located interaction (e.g., gestures vs. touch). We conducted an exploratory user study (n=16), observing pairs of people experiencing two of the applications, and found a high level of enjoyment and social interaction both with and without an HMD.

Gugenheimer, J., Stemasov, E., Sareen, H., & Rukzio, E. March 2018. A Demonstration of FaceDisplay: Asymmetric Multi-User Interaction for Mobile VR. In 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) pp. 753-754. IEEE.

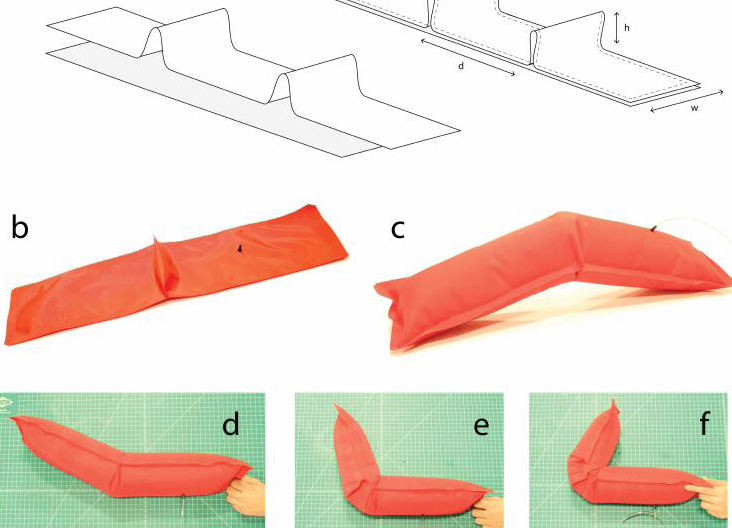

Printflatables: Printing human-scale, functional and dynamic inflatable objects

Printflatables is a design and fabrication system for human-scale, functional, and dynamic inflatable objects. We use inextensible thermoplastic fabric as the raw material, with the key principle of introducing folds and thermal sealing. Upon inflation, the sealed object takes the expected three-dimensional shape. The workflow begins with the user specifying an intended 3D model, which is decomposed into two-dimensional fabrication geometry. This forms the input for a numerically controlled thermal contact iron that seals layers of thermoplastic fabric. In this paper, we discuss the system design in detail, the pneumatic primitives that this technique enables, and the merits of being able to make large, functional, and dynamic pneumatic artifacts. We demonstrate the design output through multiple objects that could motivate the fabrication of inflatable media and pressure-based interfaces.

Harpreet Sareen, Udayan Umapathi, Patrick Shin, Yasuaki Kakehi, Jifei Ou, Pattie Maes, Hiroshi Ishii. May 2017. Printflatables: Printing Human-scale, Functional and Dynamic Inflatable Objects. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Denver, US, May 6-11, 2017. CHI ’17. ACM New York, NY.

A Demonstration of FaceDisplay: Asymmetric Multi-User Interaction for Mobile VR

Mobile VR HMDs enable users to experience virtual reality content in a variety of nomadic scenarios, excluding all the people in the surroundings (Non-HMD Users) and reducing them to mere bystanders. This leads to a scenario where the HMD User experiences a sense of isolation and the Non-HMD Users a sense of exclusion. To counter these phenomena, we present FaceDisplay, a modified VR HMD consisting of three touch-sensitive displays and a depth camera attached to its back. This allows Non-HMD Users to see inside the immersed user's virtual world and to interact via touch and gestures. We built a VR HMD prototype consisting of three additional screens and present interaction techniques and an example application that leverage the FaceDisplay design space.

Gugenheimer, Jan, et al. "A Demonstration of FaceDisplay: Asymmetric Multi-User Interaction for Mobile VR." 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). IEEE, 2018.

Body-Borne Computers as Extensions of Self

The opportunities for wearable technologies go well beyond always-available information displays or health sensing devices. The concept of the cyborg introduced by Clynes and Kline, along with works in various fields of research and the arts, offers a vision of what technology integrated with the body can offer. This paper identifies different categories of research aimed at augmenting humans. The paper specifically focuses on three areas of augmentation of the human body and its sensorimotor capabilities: physical morphology, skin display, and somatosensory extension. We discuss how such digital extensions relate to the malleable nature of our self-image. We argue that body-borne devices are no longer simply functional apparatus, but offer a direct interplay with the mind. Finally, we also showcase some of our own projects in this area and shed light on future challenges.

Leigh, Sang-won, Harpreet Sareen, Hsin-Liu Cindy Kao, Xin Liu, and Pattie Maes. "Body-Borne Computers as Extensions of Self." Computers 6, no. 1 (2017): 12.

FaceDisplay: Enabling Multi-User Interaction for Mobile Virtual Reality

We present FaceDisplay, a multi-display mobile virtual reality (VR) head-mounted display (HMD), designed to enable non-HMD users to perceive and interact with the virtual world of the HMD user. Mobile VR HMDs offer the ability to immerse oneself wherever and whenever the user wishes. This enables application scenarios in which users can interact with VR in public places. However, this leaves everyone nearby without an HMD as mere bystanders and onlookers. We propose FaceDisplay, a multi-display mobile VR HMD, allowing bystanders to see inside the immersed user's virtual world and to interact via touch. We built a prototype consisting of three additional screens and present interaction techniques and an example application that leverage the FaceDisplay design space.

Gugenheimer J., Stemasov E., Sareen H, Rukzio E. May 2017. FaceDisplay: Enabling Multi-User Interaction for Mobile Virtual Reality. Demo Paper. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. Denver, US, May 6-11, 2017. CHI ’17. ACM New York, NY.

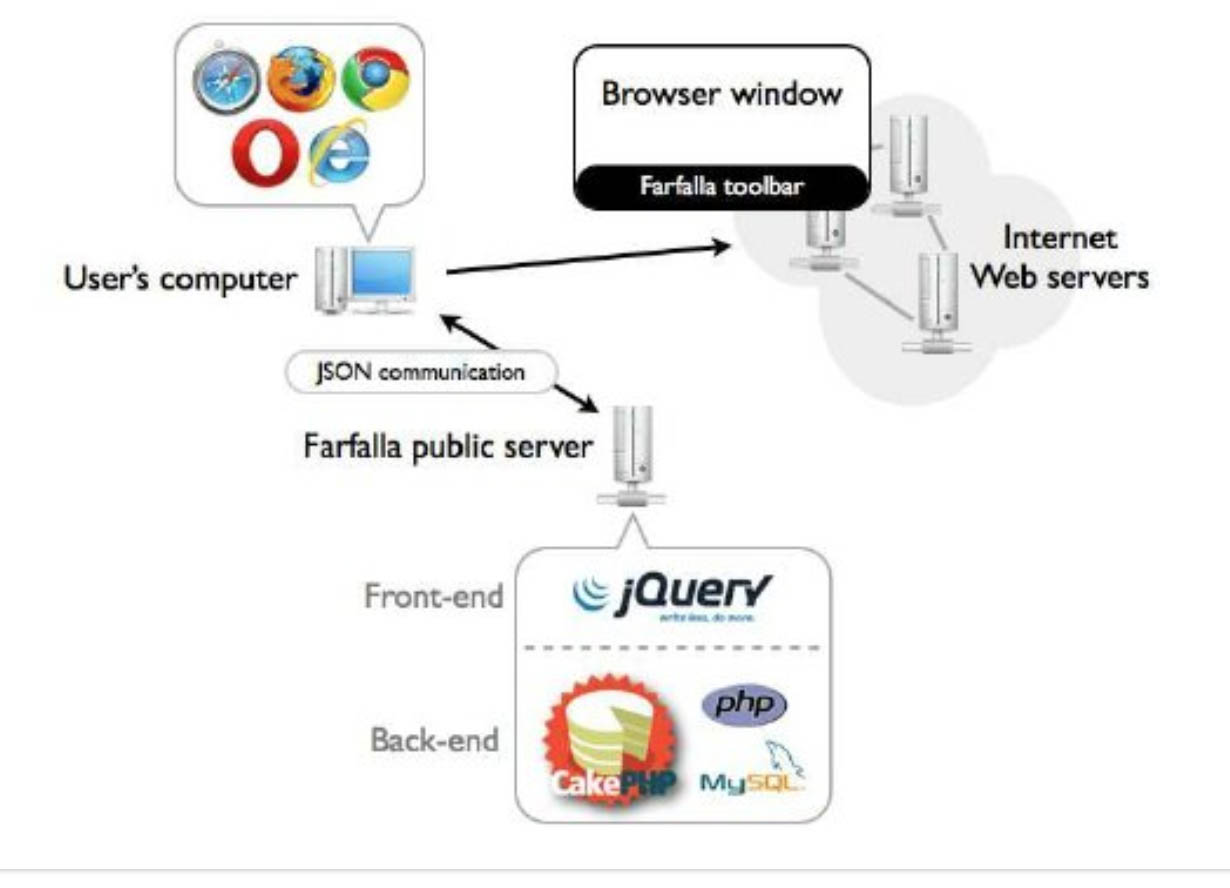

Farfalla project: browser-based accessibility solutions

The aim of this project is to create an all-embracing web framework that raises the accessibility level of websites and provides users with novel methods for browsing with fewer difficulties. Ensuring accessibility requires effort on three main levels: standards definition, accessibility evaluation, and assistive technology (AT) tool development, not necessarily in this order. Standards are necessary for optimizing the whole process, but they are not enough to ensure real accessibility [6]. Evaluating web accessibility is a difficult and time-consuming task, especially when evaluation over time is of interest [2]; such a task can be carried out with many distinct techniques [4, 1, 3]. AT solutions, on the other hand, can be very useful both for accessing information and for participating in everyday life, which are the two major ‘functionalities’ offered by the World Wide Web. However, computer-based AT solutions often suffer from being tightly bound to a particular device or architecture.

Mangiatordi, Andrea, and Harpreet Singh Sareen. "Farfalla project: browser-based accessibility solutions." Proceedings of the International Cross-Disciplinary Conference on Web Accessibility. 2011.

ACADEMIC REVIEWING

Editorial Boards

Nature Humanities and Social Sciences (Science, Technology and Society)

Program Committees/Chairing

2023 ACM DIS (Designing Interactive Systems) PC Member

2023 ACM CHI (Conference on Human Factors in Computing Systems) PC Member LBW Track

2023 ACM IMX (Conference on Interactive Media Experiences) PC Member

2022 ACM CHI (Conference on Human Factors in Computing Systems) PC Member LBW Track

2021 IEEE ISMAR (International Symposium on Mixed and Augmented Reality) PC Member

2020 ACM DIS (Designing Interactive Systems) PC Member

2020 ACM PerDis (International Symposium on Pervasive Displays) PC Member

2019 DesForm (Design and Semantics of Form and Movement) Program Committee

2018 ACM CHI (Conference on Human Factors in Computing Systems) Session Chair

Reviews

2023 ACM TEI (Tangible and Embodied Interaction)

2023 ACM TOCHI Journal Reviewing

2022 ACM IDC (Interaction Design for Children)

2021 Leonardo LABS

2021 ACM CHI (Conference on Human Factors in Computing Systems)

2020 MDPI Applied Sciences

2020 Leonardo/ISAST Journal

2020 ACM TEI (Tangible and Embodied Interaction)

2020 ACM Mobile HCI (Conference on Human-Computer Interaction with Mobile Devices)

2020 IEEE ISMAR (International Symposium on Mixed and Augmented Reality)

2020 ACM DIS (Designing Interactive Systems) Papers/Pictorials

2020 ACM IDC (Interaction Design for Children)

2020 ACM UIST (Symposium on User Interface Software and Technology)

2019 ACM TEI (Tangible and Embodied Interaction)

2019 JoVE (Journal of Visualized Experiments)

2019 MDPI Sensors

2019 ACM CHI (Conference on Human Factors in Computing Systems)

2018 ACM CHI (Conference on Human Factors in Computing Systems)

2017 ACM CHI (Conference on Human Factors in Computing Systems)

2016 ACM CHI (Conference on Human Factors in Computing Systems)

2016 ACM UIST (Symposium on User Interface Software and Technology)

2016 ACM AH (Augmented Human International Conference)

2012 SV International Conference on Recent Advances and Future Trends in Information Technology

COPYRIGHT © 2020